Generative AI tools are amplifying Islamophobic narratives and stereotypes on social media in India.

Images that portray exaggerated features and reinforce stereotypes of Muslims are increasingly circulating on major social media platforms in India. These visuals often serve to illustrate or support Hindutva conspiracy theories, raising concerns about their impact on public perceptions of Muslims. A report by the Center for the Study of Organized Hate, released on September 29, highlights how artificial intelligence tools are being used to generate such imagery, which can amplify hate and misinformation.

Researchers examined 1,326 AI-generated images and videos shared by 297 public accounts on platforms like X (formerly Twitter), Facebook, and Instagram between May 2023 and May 2025. The study underscores the ease with which tools such as Midjourney, Stable Diffusion, and DALL·E can produce content that fuels Islamophobia. The report notes that the growing accessibility of AI technologies in the Indian market, particularly through affordably priced subscription plans, makes this analysis urgent.

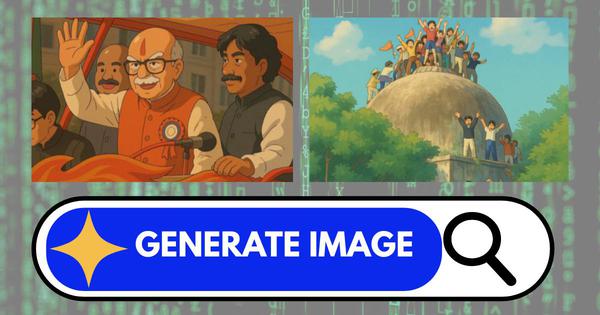

The report indicates that these images, often vivid and realistic, have been used by pro-Hindutva media organizations, particularly as thumbnails for YouTube videos or alongside articles. The content analyzed garnered over 27.3 million engagements, mainly on X, suggesting substantial reach and influence. The findings illustrate how generative AI is being weaponized to dehumanize Muslims and incite violence against them, echoing previous practices but with greater credibility and precision.

Researchers categorized the images into four main themes: conspiratorial Islamophobic narratives, exclusionary and dehumanizing rhetoric, the sexualization of Muslim women, and the aestheticization of violence. Among these, images depicting gendered Islamophobia received the highest level of engagement, indicating that Muslim women face unique and heightened threats in this context. The report points out that these images often portray Muslim women in submissive roles, reinforcing harmful stereotypes and misogyny as part of a broader Islamophobic agenda.

Furthermore, the study notes the disturbing trend of employing artistic styles, such as those reminiscent of Japanese animated films, to depict violence or mockery. Such stylistic choices can sanitize brutal incidents, making them appear more palatable and normalizing violence against minority groups. This visual propaganda feeds a growing climate of fear and exclusion within Indian society.

The implications of this trend extend beyond mere representation; the report warns that it accelerates the dehumanization of religious minorities and undermines constitutional protections. The phenomenon is not confined to India: findings from other regions, such as the United States, show that generative AI has similarly been used to propagate hate and misinformation.

As these technologies continue to evolve, distinguishing truth from deception will become increasingly difficult. Experts emphasize the importance of addressing the misuse of generative AI to spread harmful rhetoric and incite violence. Calls for social media companies to regulate the use of these technologies have grown louder, highlighting the urgent need for intervention to prevent the further amplification of hate against marginalized communities.